AI adventures in arts and letters – TechCrunch


There’s more AI news out there than anyone can possibly keep up with. But you can stay tolerably up to date on the most interesting developments with this column, which collects AI and machine learning advancements from around the world and explains why they might be important to tech, startups or civilization.

To begin on a lighthearted note: The ways researchers find to apply machine learning to the arts are always interesting — though not always practical. A team from the University of Washington wanted to see if a computer vision system could learn to tell what is being played on a piano just from an overhead view of the keys and the player’s hands.

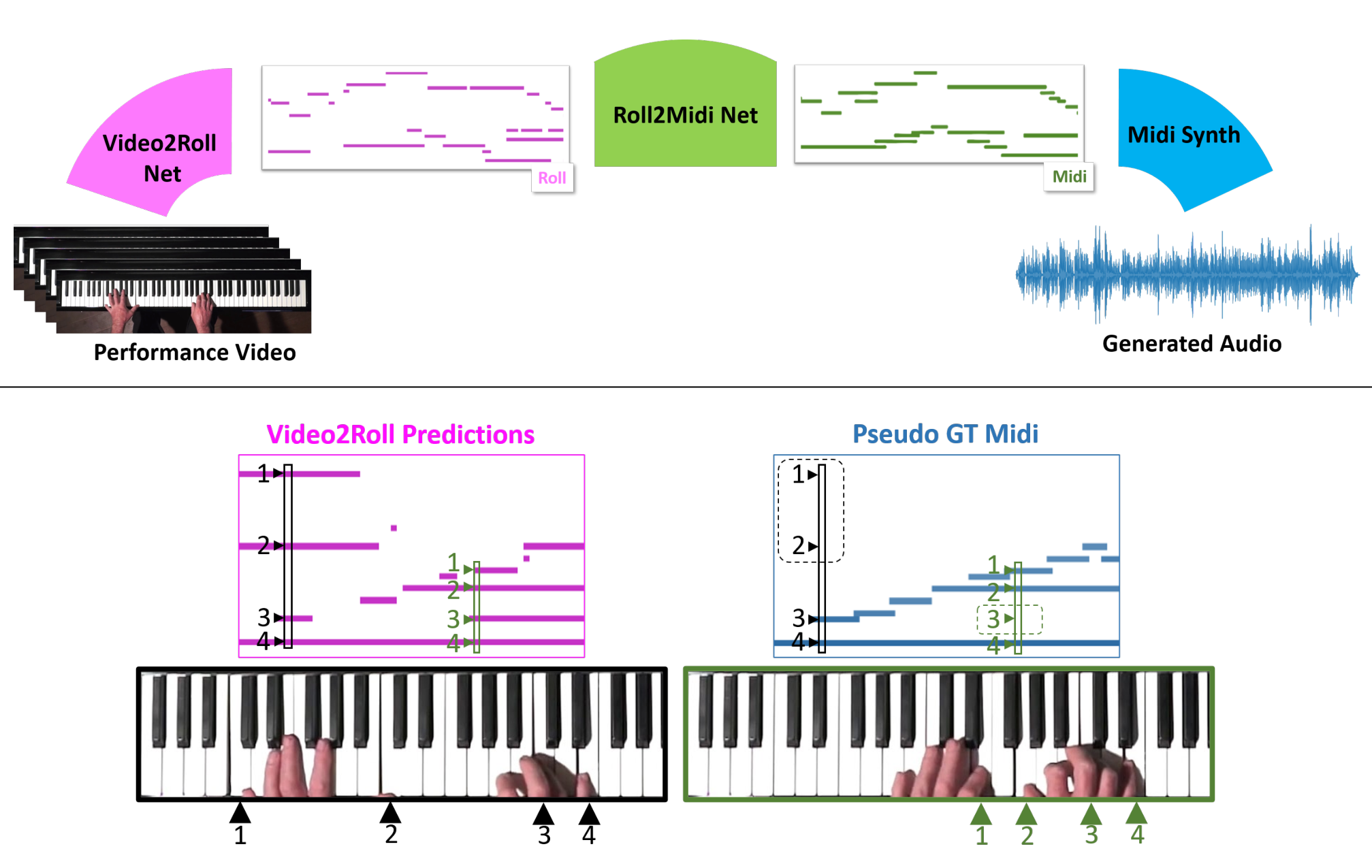

Audeo, the system trained by Eli Shlizerman, Kun Su and Xiulong Liu, watches video of piano playing and first extracts a simple, piano-roll-like sequence of key presses. It then adds expression in the form of the length and strength of each press, and finally polishes the result so a MIDI synthesizer can play it. The results are a little loose but definitely recognizable.

“To create music that sounds like it could be played in a musical performance was previously believed to be impossible,” said Shlizerman. “An algorithm needs to figure out the cues, or ‘features,’ in the video frames that are related to generating music, and it needs to ‘imagine’ the sound that’s happening in between the video frames. It requires a system that is both precise and imaginative. The fact that we achieved music that sounded pretty good was a surprise.”
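To picture the middle stage of that pipeline, where bare key presses gain length and strength, here is a toy Python sketch. It is not Audeo's code. It assumes a hypothetical input format: a frame-by-key grid of on/off presses (as a vision model might output) plus a per-frame loudness estimate for each key. The names `roll_to_notes` and `NoteEvent` are invented for illustration, and the roll is assumed to start at A0 (MIDI note 21), as on an 88-key piano.

```python
from dataclasses import dataclass

LOWEST_MIDI_PITCH = 21  # assumption: key index 0 is A0, MIDI note 21


@dataclass(frozen=True)
class NoteEvent:
    pitch: int       # MIDI note number
    onset: float     # start time in seconds
    duration: float  # length of the press in seconds
    velocity: int    # MIDI strength, clamped to 1..127


def roll_to_notes(roll, velocities, fps):
    """Turn a frame-by-key on/off roll into timed note events (hypothetical format).

    roll[t][k] is True when key k is pressed in video frame t;
    velocities[t][k] is an assumed loudness estimate for that key and frame.
    """
    notes = []
    n_frames = len(roll)
    n_keys = len(roll[0]) if roll else 0
    for k in range(n_keys):
        t = 0
        while t < n_frames:
            if roll[t][k]:
                start = t
                # Length: count consecutive frames in which the key stays down.
                while t < n_frames and roll[t][k]:
                    t += 1
                # Strength: take the loudest estimate seen during the press.
                vel = max(velocities[f][k] for f in range(start, t))
                notes.append(NoteEvent(
                    pitch=LOWEST_MIDI_PITCH + k,
                    onset=start / fps,
                    duration=(t - start) / fps,
                    velocity=max(1, min(127, int(vel))),
                ))
            else:
                t += 1
    # Order events by time, as a MIDI writer would expect.
    notes.sort(key=lambda n: (n.onset, n.pitch))
    return notes
```

For example, a three-frame clip at 10 frames per second in which the lowest key is held for two frames yields one note starting at 0.0 s and lasting 0.2 s. A real system would still need a final step to write these events to a MIDI file or stream for the synthesizer.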

Another project from the world of arts and letters is this fascinating research into computationally unfolding centuries-old letters too delicate to handle. An MIT team was looking at “locked” letters from the 17th century that are so intricately folded and sealed that removing and flattening them could damage them permanently. Their approach was to X-ray the letters and set a new, advanced algorithm to work deciphering the resulting imagery.

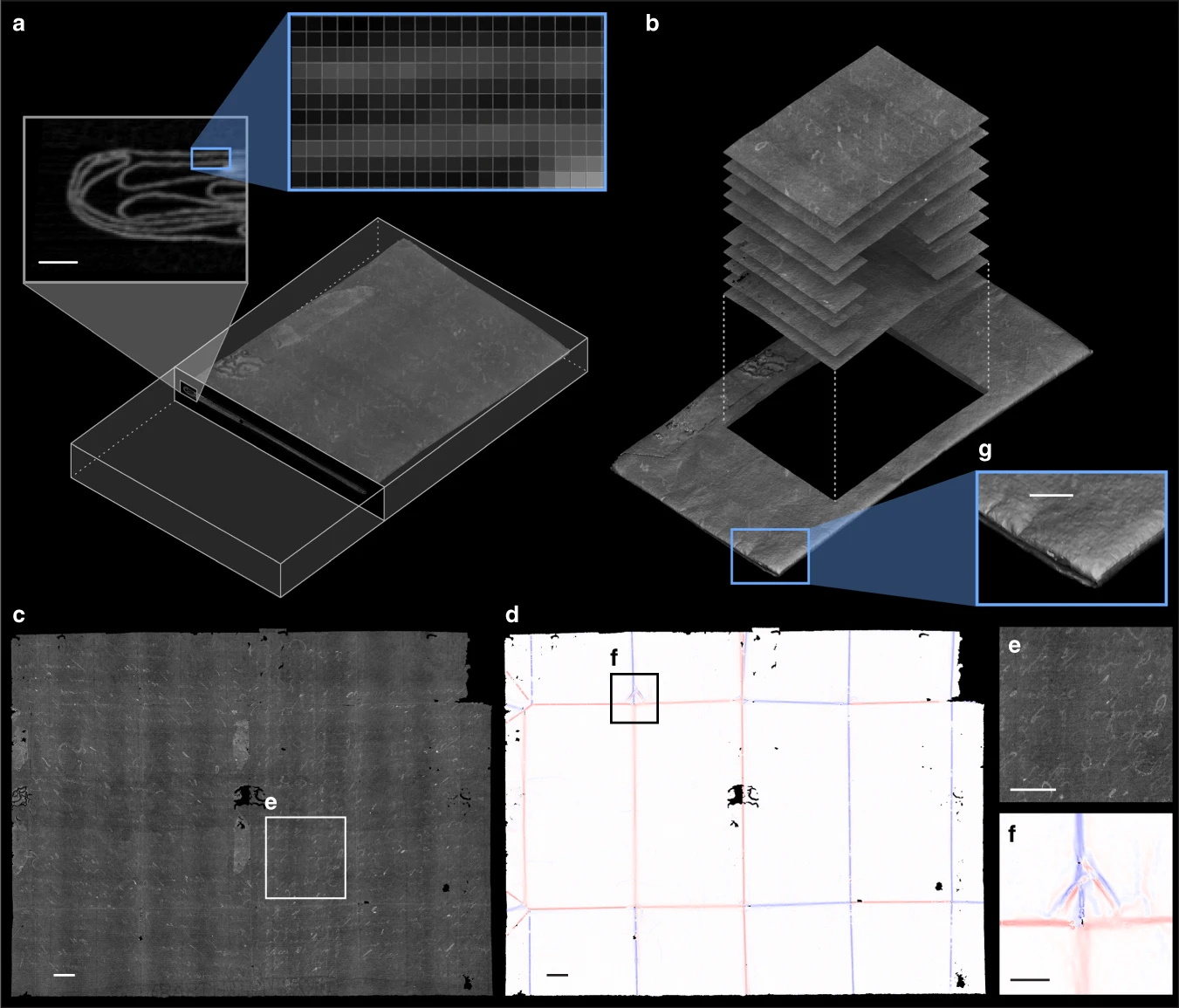

Diagram showing X-ray views of a letter and how it is analyzed to virtually unfold it. Image Credits: MIT

“The algorithm ends up doing an impressive job at separating the layers of paper, despite their extreme thinness and tiny gaps between them, sometimes less than the resolution of the scan,” MIT’s Erik Demaine said. “We weren’t sure it would be possible.” The work may be applicable to many kinds of documents that are difficult for simple X-ray techniques to unravel. It’s a bit of a stretch to categorize this as “machine learning,” but it was too interesting not to include. Read the full paper at Nature Communications.

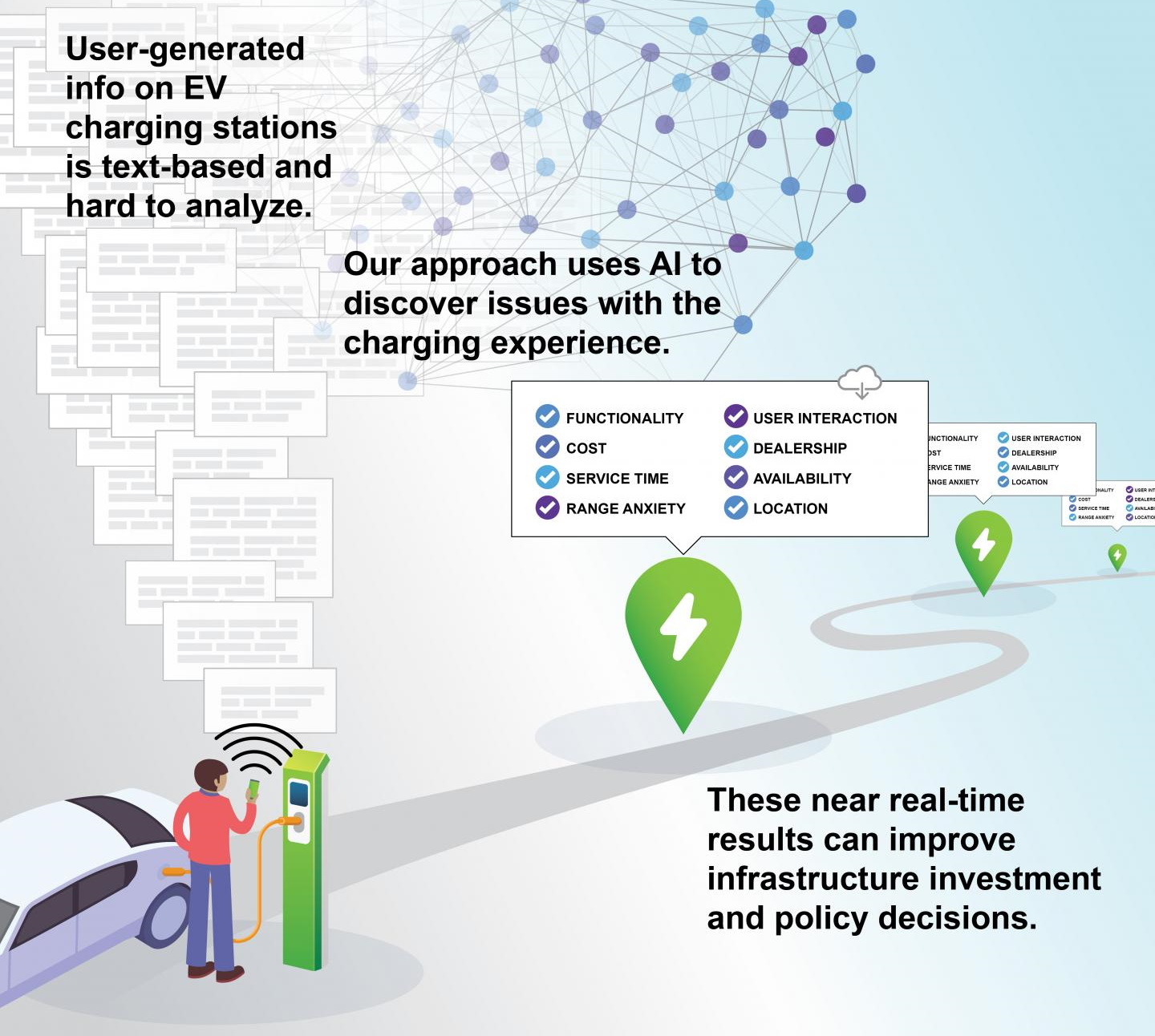

You arrive at a charge point for your electric car and find it to be out of service. You might even leave a bad review online. In fact, thousands of such reviews exist and constitute a potentially very useful map for municipalities looking to expand electric vehicle infrastructure.

Georgia Tech’s Omar Asensio trained a natural language processing model on such reviews, and it soon became adept at parsing them by the thousands and extracting insights such as where outages were common, comparative cost and other factors.
